Generative Answering FAQ

Scaling Generative AI projects across an enterprise comes with several challenges. A common issue is the fragmentation caused by multiple teams running siloed proof-of-concept (POC) projects. These disconnected efforts often lead to duplicated work, inconsistent data pipelines, and fractured knowledge bases.

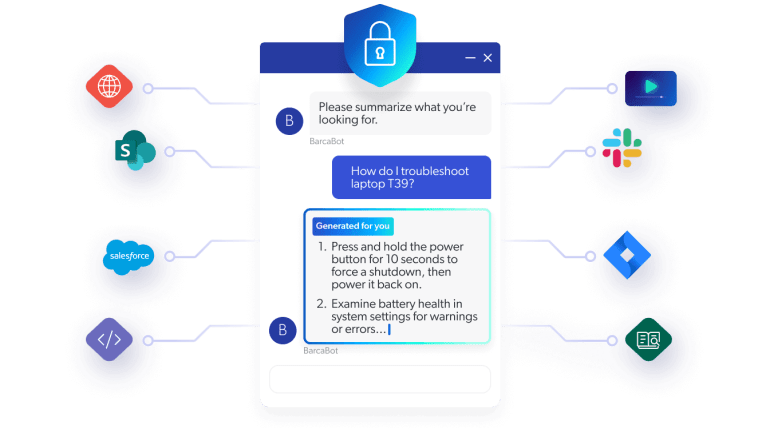

Security and compliance are also significant hurdles: enterprise data requires strict access controls and must adhere to regulatory standards, which makes secure pipelines cumbersome to create and maintain.

Additionally, integrating diverse data sources with varying formats and APIs is complex, requiring ongoing maintenance to prevent stale data or connection issues.

Finally, achieving scalability is difficult, as production systems must handle millions of users and documents while maintaining high performance and reliability.

Enterprise Reality: Deploying GenAI for real business use cases is far more complex than building a polished proof-of-concept or quick demonstration. Even for organizations that have invested in training their own LLMs, security, scalability, and data quality remain ongoing concerns.

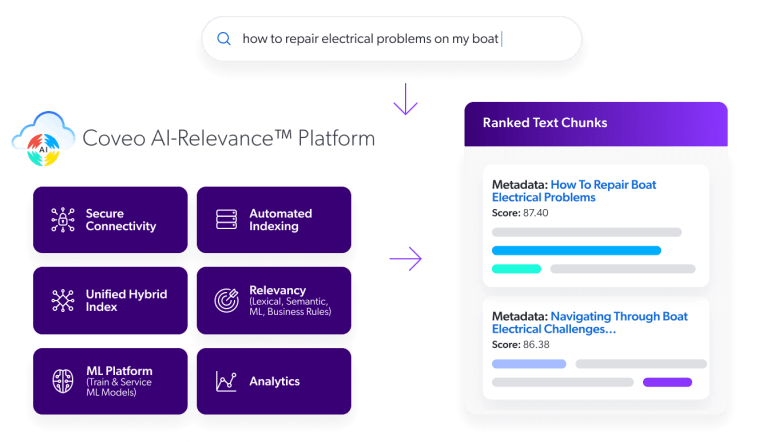

The Retrieval Gap: Retrieval Augmented Generation (RAG) is widely touted as the way to ground LLMs in an organization’s data, but information retrieval is harder than organizations expect. Many teams underestimate the operational overhead of connecting to, securing, unifying, and maintaining data sources at scale.

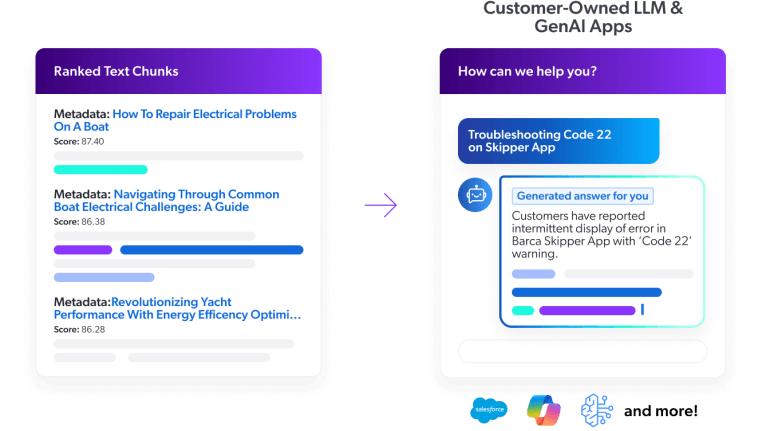

Retrieval is essential because it grounds Generative AI applications in accurate, timely, and relevant data. While creating prompts or selecting a large language model (LLM) can be relatively straightforward, feeding the LLM high-quality data is far more challenging. If the retrieval system is flawed, whether through stale data, poor search relevance, or fragmented sources, the AI outputs will be unreliable: a “garbage in, garbage out” scenario. Coveo addresses this by indexing data across silos, ranking results with AI, and enforcing strict security measures, so that the LLM receives the best available information. This robust retrieval foundation is what enables scalable and reliable generative AI applications.
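
The failure modes named above (stale data, poor relevance, fragmented sources, missing access control) can be made concrete with a minimal sketch. This is not Coveo's implementation or API: `Passage`, `score`, `retrieve`, and `build_prompt` are hypothetical names, the index is an in-memory list, and relevance is a naive keyword overlap standing in for AI ranking.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Passage:
    text: str
    source: str                 # which silo the passage came from
    updated: date               # last time the source document changed
    allowed_groups: frozenset   # groups permitted to read the document


def score(query: str, passage: Passage) -> int:
    """Toy relevance: number of distinct query words found in the passage."""
    query_words = set(query.lower().split())
    passage_words = set(passage.text.lower().split())
    return len(query_words & passage_words)


def retrieve(query, index, user_groups, today, max_age_days=365, top_k=2):
    """Return up to top_k passages the user may see, skipping stale or irrelevant ones."""
    cutoff = today - timedelta(days=max_age_days)
    candidates = [
        p for p in index
        if p.allowed_groups & user_groups   # enforce access control first
        and p.updated >= cutoff             # drop stale content
        and score(query, p) > 0             # drop passages with no word overlap
    ]
    return sorted(candidates, key=lambda p: score(query, p), reverse=True)[:top_k]


def build_prompt(query, passages):
    """Assemble a grounded prompt; the LLM call itself is out of scope here."""
    context = "\n".join(f"[{p.source}] {p.text}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Illustrative data only: three passages from three hypothetical silos.
today = date(2024, 6, 1)
index = [
    Passage("reset your password from the login page", "kb",
            date(2024, 5, 1), frozenset({"employees"})),
    Passage("password policy for administrators only", "wiki",
            date(2024, 4, 1), frozenset({"admins"})),
    Passage("reset password using the old portal", "legacy",
            date(2020, 1, 1), frozenset({"employees"})),
]
hits = retrieve("reset password", index, {"employees"}, today)
prompt = build_prompt("reset password", hits)
```

In this toy data, only the `kb` passage survives for a user in the `employees` group: the `wiki` passage is filtered out by permissions and the `legacy` passage by age. Whatever reaches `build_prompt` is all the LLM can draw on, which is why retrieval quality bounds answer quality.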

How much of your generative AI experience to build yourself varies by customer and use case. It’s common for organizations to deploy a combination of custom and out-of-the-box generative answering solutions.

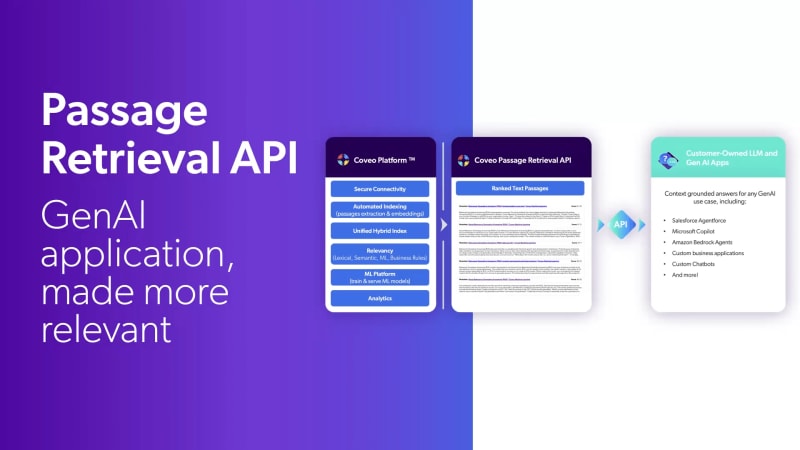

The reasons for building custom generative AI applications are often to control the front-end user interface, to use a built-for-purpose or enterprise-trained LLM, to tie into a chatbot or agentic workflow, or for more granular control over the prompt engineering. In all of these cases, the information retrieval portion of RAG is the most difficult to create and maintain; this is where the Coveo Passage Retrieval API comes in.
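
One way to picture that split is a custom application that owns the prompt template and the LLM while treating retrieval as a swappable service. The sketch below rests on assumptions: the `Retriever` protocol, `StaticRetriever`, `answer`, and `echo_llm` are hypothetical names for illustration and do not reflect the Passage Retrieval API's actual interface.

```python
from typing import Callable, Protocol, Sequence


class Retriever(Protocol):
    """Anything that turns a query into text passages (hypothetical interface)."""

    def retrieve(self, query: str, top_k: int) -> Sequence[str]: ...


class StaticRetriever:
    """Stand-in for a managed retrieval service; returns canned passages."""

    def __init__(self, passages: Sequence[str]) -> None:
        self._passages = list(passages)

    def retrieve(self, query: str, top_k: int) -> Sequence[str]:
        return self._passages[:top_k]


def answer(
    query: str,
    retriever: Retriever,
    llm: Callable[[str], str],
    template: str = "Context:\n{context}\n\nQuestion: {query}",
    top_k: int = 3,
) -> str:
    """Retrieve passages, fill the team's own prompt template, call the team's own LLM."""
    passages = retriever.retrieve(query, top_k)
    prompt = template.format(context="\n".join(passages), query=query)
    return llm(prompt)


def echo_llm(prompt: str) -> str:
    """Placeholder model: returns the prompt unchanged instead of generating text."""
    return prompt


reply = answer("How do I reset my password?",
               StaticRetriever(["Use the login page.", "Contact IT."]),
               echo_llm)
```

Because `answer` depends only on the `Retriever` protocol, the front end, prompt template, and model can each change without touching the retrieval layer, and a hosted retrieval service could replace `StaticRetriever` behind the same method.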

If you’re looking for an out-of-the-box managed solution for service, website, workplace, and commerce use cases, check out Coveo Relevance Generative Answering. This product uses the same retrieval API but offers a plug-and-play generative answering experience with a short time-to-value.

To unify and scale GenAI projects, consolidate efforts around a single, secure information retrieval system. Fragmented POCs often lead to duplicated work, fractured data, and inconsistent security. A robust platform like Coveo centralizes retrieval, indexing data across silos while ensuring secure, relevant, and scalable access. By grounding your LLMs with reliable retrieval, you streamline operations and enable consistent, enterprise-wide AI deployment.

Scalability comes from proven, enterprise-grade technology built for high volumes and complexity. Coveo’s AI-driven retrieval engine is designed to deliver secure, compliant, and reliable performance across millions of documents and users. With plug-and-play generative answers and custom retrieval APIs, partnering with Coveo reduces development time, cuts costs, and accelerates the ROI of retrieval augmented generation projects, delivering impactful GenAI solutions in weeks.